A large portion of the web still relies on REST APIs (93.4% of API developers are still using REST), and yet the developer community is going gaga over gRPC, and for good reason (which we’ll explore shortly).

gRPC History

Starting around 2001, Google built a general-purpose RPC infrastructure called Stubby to connect the large number of microservices running within and across its data centers. In March 2015, Google decided to build the next version of Stubby and make it open source. The result was gRPC.

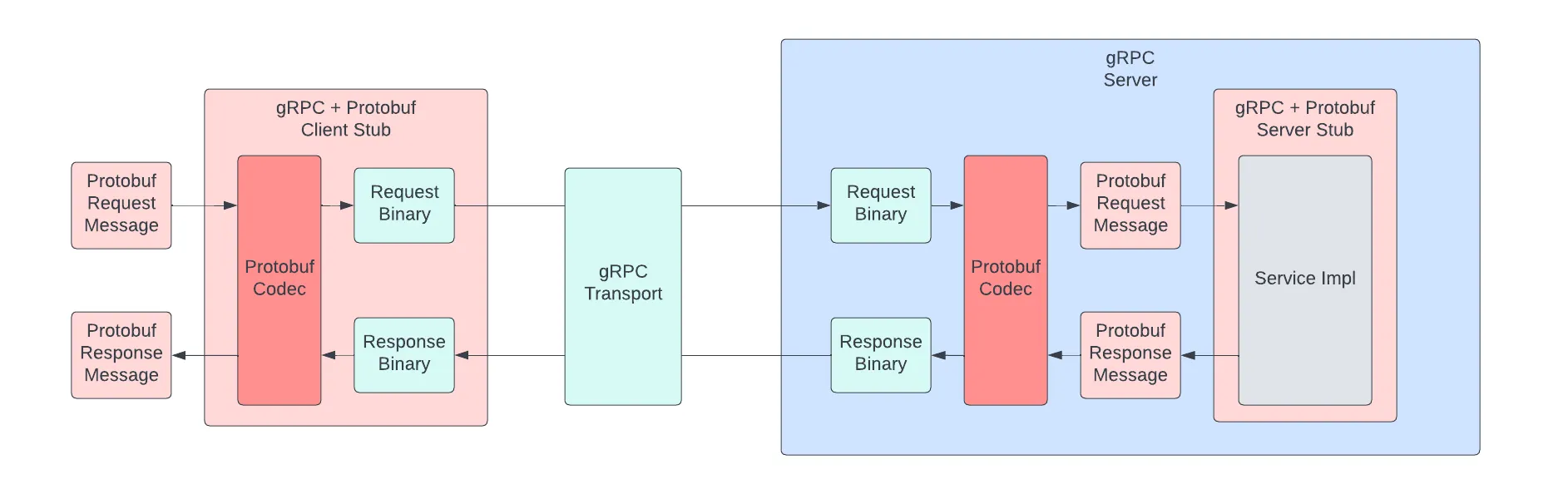

gRPC is a cross-platform, high-performance RPC (Remote Procedure Call) framework. It builds on the classic RPC model, which follows the familiar client-server pattern and typically runs over TCP. Let’s dissect RPC.

What is RPC?

RPC lets a program call a function that lives in another program, whether that program runs on the same computer or on a remote machine, as if it were a local function. It works on the same client-server model of request and response and mostly uses a TCP connection. Note that I said “mostly TCP”!
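To make the idea concrete, here is a minimal sketch using Python’s standard-library `xmlrpc` modules. This is plain XML-RPC over HTTP, not gRPC, and the `add` function, the loopback address, and the ephemeral port are assumptions chosen purely for illustration.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """The procedure that lives in the 'remote' program."""
    return a + b


# Server side: expose add() on a free loopback port (port 0 = let the OS pick).
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: the call looks like a local function call, but the arguments
# are serialized, sent over TCP, executed by the server, and the result is
# sent back.
client = ServerProxy(f"http://127.0.0.1:{port}")
result = client.add(2, 3)

server.shutdown()
server.server_close()
```

The client never sees sockets or serialization; it only sees `client.add(2, 3)`. That illusion of a local call is the core of every RPC system, gRPC included.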

Bruce Jay Nelson is generally credited with coining the term “Remote Procedure Call” in 1981.

Why gRPC Shines

gRPC is a modern open source high performance Remote Procedure Call (RPC) framework that can run in any environment. It can efficiently connect services in and across data centers with pluggable support for load balancing, tracing, health checking and authentication. It is also applicable in last mile of distributed computing to connect devices, mobile applications and browsers to backend services.

The above statements are hard to absorb, especially if you are reading them for the first time. Several features make gRPC so desirable in the current era. Let’s dive into the prominent ones to understand how powerful gRPC can be and how well it scales.

Let’s compare gRPC with REST on each of these highlighted features.

The transport layer: HTTP/2

Though there are several different protocols out there, the web is still mostly running on HTTP when it comes to APIs. The HTTP protocol has seen more changes in the last few years than it did in the previous two decades, with HTTP/3 now being the latest version. gRPC is built on top of HTTP/2 instead of the HTTP/1.1 that most REST APIs use, and the differences between those two versions are pretty striking.

| gRPC | REST |
|---|---|
| Built directly on HTTP/2, enabling modern transport capabilities. | Typically relies on HTTP/1.1, which lacks advanced transport features. |
| Multiplexes many parallel requests over a single connection, avoiding HTTP-level head-of-line blocking. | Handles one request at a time per connection, leading to potential bottlenecks. |
| Uses HPACK compression, reducing header size and improving efficiency. | Sends full headers for each request, increasing bandwidth usage. |
| Persistent connection optimizes long-lived communication patterns. | Often re-establishes or reuses connections without multiplexing. |
| Lower latency and higher throughput due to binary framing and efficient transport. | Higher latency, especially under high load, because of HTTP/1.1 limitations. |

HTTP/2 accounts for a large part of gRPC’s performance advantage: under concurrent load it can process requests several times faster and cut latency substantially compared to REST over HTTP/1.1, although the exact gain depends on the workload and network.
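The multiplexing idea is easier to see in bytes. The following is a simplified sketch, not a real HTTP/2 implementation: it builds only DATA frames with the standard 9-byte frame header (24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID) and ignores HEADERS frames, HPACK, flow control, and settings. The helper names `encode_frame` and `demultiplex` and the payloads are assumptions made for illustration.

```python
import struct
from collections import defaultdict

DATA = 0x0        # HTTP/2 frame type for DATA frames
END_STREAM = 0x1  # flag marking the last frame of a stream


def encode_frame(stream_id, payload, flags=0, frame_type=DATA):
    """Build one frame: 9-byte header followed by the payload."""
    length = struct.pack(">I", len(payload))[1:]  # keep the low 24 bits
    rest = struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF)
    return length + rest + payload


def demultiplex(connection_bytes):
    """Split one byte stream back into per-stream payloads."""
    streams = defaultdict(bytes)
    pos = 0
    while pos < len(connection_bytes):
        length = int.from_bytes(connection_bytes[pos:pos + 3], "big")
        frame_type, _flags, stream_id = struct.unpack(
            ">BBI", connection_bytes[pos + 3:pos + 9]
        )
        stream_id &= 0x7FFFFFFF  # top bit is reserved
        if frame_type == DATA:
            streams[stream_id] += connection_bytes[pos + 9:pos + 9 + length]
        pos += 9 + length
    return dict(streams)


# Two logical requests (streams 1 and 3) interleaved on ONE connection.
wire = b"".join([
    encode_frame(1, b"hello "),
    encode_frame(3, b"second "),
    encode_frame(1, b"world", flags=END_STREAM),
    encode_frame(3, b"call", flags=END_STREAM),
])
streams = demultiplex(wire)
```

Because every frame carries its stream ID, neither request has to wait for the other to finish before its bytes go on the wire. With HTTP/1.1, the second request would need either its own connection or the first response to complete.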

The data format: Protocol Buffers

For a long time, developers have favored data formats that are convenient for humans, to ease development, debugging, and representation. But formats like XML and JSON are text-based, heavy, and slow to serialize and deserialize!

| gRPC | REST |
|---|---|
| Uses Protocol Buffers, a compact binary format optimized for speed and efficiency. | Typically uses JSON, a verbose text-based format that is readable but larger. |
| Extremely fast due to binary encoding and schema-driven mapping. | Slower due to text parsing and less strict structure. |
| Very small payloads, ideal for high-throughput systems or bandwidth-constrained environments. | Larger payloads, increasing network usage and latency. |
| Strongly typed using .proto files, ensuring contract consistency and backward compatibility. | Lacks a built-in schema; validating structure requires external tools like OpenAPI. |
| Schema enforcement catches issues at compile time. | Errors often surface at runtime due to loosely typed formats. |

An example is a good way to see the difference. Let’s look at a bare-minimum User object in both JSON and Protocol Buffers.

{

To represent the same object with Protocol Buffers, we define a User message and then serialize it to binary.

syntax = "proto3";

After serialization the data is binary. Shown as hex bytes, it looks like this:

08 7b 12 05 41 6c 69 63 65 1a 11 61 6c 69 63 65 40 65 78 61 6d 70 6c 65 2e 63 6f 6d 20 01
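Those bytes are not opaque. As a rough sketch, the hex dump above can be decoded by hand with a few lines of Python that follow the Protocol Buffers wire format: each field starts with a varint tag holding the field number and wire type. The helper names `read_varint` and `decode_fields` are assumptions for this example, and only the two wire types that occur in this payload (0 = varint, 2 = length-delimited) are handled.

```python
def read_varint(buf, pos):
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear means this was the last byte
            return result, pos
        shift += 7


def decode_fields(buf):
    """Return a list of (field_number, wire_type, value) tuples."""
    fields = []
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:  # varint: ints, bools, enums
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited: strings, bytes, messages
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        fields.append((field_number, wire_type, value))
    return fields


payload = bytes.fromhex(
    "08 7b 12 05 41 6c 69 63 65 1a 11 61 6c 69 63 65 40 65 78 61 "
    "6d 70 6c 65 2e 63 6f 6d 20 01"
)
fields = decode_fields(payload)
```

The result is field 1 = 123, field 2 = `b"Alice"`, field 3 = `b"alice@example.com"`, and field 4 = 1. Note what is missing: field names. The wire carries only field numbers, which is why both sides need the same .proto file and why the payload stays so small.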

You may be surprised by the numbers for JSON vs. Protocol Buffers across 1M objects. Here’s the table:

| Metric | JSON | Protocol Buffers |
|---|---|---|
| Serialization time (1M objects) | ~550 ms | ~90 ms |
| Deserialization time (1M objects) | ~780 ms | ~110 ms |
| Message size (per object) | ~70 bytes | ~35 bytes |
| Message size (1M objects) | ~70 MB | ~35 MB |

In this comparison, Protocol Buffers significantly outperform JSON: roughly 6× faster serialization, 7× faster deserialization, and about 50% smaller messages. In other words, Protocol Buffers deliver 6 to 7× higher throughput while transmitting only half the data.
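The size difference can be reproduced without any protobuf library. The sketch below hand-encodes a four-field message and compares it with compact JSON. The field numbers and values match the hex bytes shown earlier, but the key names (`id`, `name`, `email`, `active`) are assumptions, since the wire format does not carry names. It measures size only, not speed.

```python
import json


def encode_varint(value):
    """Encode a non-negative int as a base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def varint_field(number, value):
    """Tag (wire type 0) followed by the varint value."""
    return encode_varint((number << 3) | 0) + encode_varint(value)


def string_field(number, text):
    """Tag (wire type 2), byte length, then the UTF-8 bytes."""
    data = text.encode("utf-8")
    return encode_varint((number << 3) | 2) + encode_varint(len(data)) + data


# Hypothetical key names; only the numbers 1..4 appear on the wire.
user = {"id": 123, "name": "Alice", "email": "alice@example.com", "active": True}

proto_bytes = (
    varint_field(1, user["id"])
    + string_field(2, user["name"])
    + string_field(3, user["email"])
    + varint_field(4, int(user["active"]))
)
json_bytes = json.dumps(user, separators=(",", ":")).encode("utf-8")
```

For this input the hand-encoded message is 30 bytes and the compact JSON is 67 bytes. Most of the difference comes from JSON repeating every key name and its quotes in every object.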

Getting the itch to jump into gRPC already? Hold on, there’s something more you’ll want to know before you dive in.

Real Time: Streaming

The demand for real-time web applications has increased significantly in recent years. We are growing more impatient over time, and software engineers keep pushing limits to make things almost instantaneous. Google sensed this early on, so gRPC comes with built-in support for streaming, whereas achieving real-time communication with REST often requires additional setup, tooling, or entirely different protocols such as WebSockets.

| gRPC | REST |
|---|---|
| Fully supports unary, server streaming, client streaming, and bidirectional streaming, all natively. | REST is fundamentally request/response, requiring workarounds for streaming. |
| Handles live updates smoothly with low latency, ideal for messaging, telemetry, and real-time dashboards. | Achieved only via WebSockets, SSE, or polling, which add complexity. |
| Uses a single long-lived HTTP/2 connection enabling continuous data flow. | Typically relies on multiple independent HTTP requests. |
| Excellent for chat systems, video feeds, IoT devices, file transfers, or anything event-driven. | Best for stateless CRUD operations or non-real-time interactions. |
| Efficient and scalable due to binary encoding and persistent streams. | Can be resource-intensive and harder to scale when attempting real-time streaming. |

gRPC can stream primarily because of HTTP/2’s multiplexed, bidirectional streams, combined with gRPC’s own length-prefixed message framing, which delimits the individual (typically Protobuf-encoded) messages inside a stream.
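The message framing is small enough to sketch. In the gRPC-over-HTTP/2 wire protocol, each message in a stream is prefixed with 5 bytes: a 1-byte compressed flag and a 4-byte big-endian length. The helper names `frame_message` and `iter_messages` and the `tick-N` payloads are assumptions for illustration; a real implementation would also handle compression and partial reads.

```python
import struct


def frame_message(payload, compressed=False):
    """Prefix one serialized message with gRPC's 5-byte header."""
    return struct.pack(">BI", int(compressed), len(payload)) + payload


def iter_messages(buf):
    """Yield (compressed, payload) for each framed message in buf."""
    pos = 0
    while pos < len(buf):
        compressed, length = struct.unpack(">BI", buf[pos:pos + 5])
        pos += 5
        yield bool(compressed), buf[pos:pos + length]
        pos += length


# A server-streaming response: several messages written back to back
# on the same HTTP/2 stream.
stream = b"".join(frame_message(m) for m in [b"tick-1", b"tick-2", b"tick-3"])
messages = [payload for _, payload in iter_messages(stream)]
```

Because each message announces its own length, the receiver can hand messages to the application one by one as they arrive instead of waiting for the whole response body.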

The platform: Cloud Native

Cloud-native is a way of designing and building software that fully leverages cloud platforms to run scalable, flexible, and highly available applications across public, private, or hybrid environments. Cloud-native principles encourage building loosely coupled, modular services that are easy to scale, observe, and operate.

This modular approach also makes systems easier to monitor, debug, and manage, since each piece can be tracked and optimized on its own.

| gRPC | REST |
|---|---|
| Designed for service-to-service communication, integrating smoothly into distributed architectures. | Widely supported but not optimized for the demands of microservices by default. |
| Native support for deadlines, retries, cancellations, and error propagation. | These must be implemented manually or managed by external libraries. |
| Works extremely well with Envoy, Istio, Linkerd, benefiting from advanced routing and observability. | Compatible with meshes but often needs additional proxies or transformations. |
| High-performance due to efficient encoding, multiplexing, and streaming, which makes it ideal for large-scale microservices. | Adequate performance but with higher overhead from JSON and HTTP/1.1. |
| Strong API contracts, auto-generated client/server code, and consistent tooling across languages. | Flexible but more manual; client/server code generation depends on external tooling. |
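To get a feel for the “implemented manually” cell in the deadlines and retries row, here is a rough sketch of the kind of helper a REST client often ends up writing by hand. It is not gRPC’s API; `call_with_deadline`, `DeadlineExceeded`, and `flaky_lookup` are hypothetical names, and the backoff values are arbitrary.

```python
import time


class DeadlineExceeded(Exception):
    """Raised when the overall time budget is used up."""


def call_with_deadline(fn, timeout_s, max_attempts=3, backoff_s=0.01):
    """Retry fn on ConnectionError, but never past one shared deadline."""
    deadline = time.monotonic() + timeout_s
    for attempt in range(1, max_attempts + 1):
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise DeadlineExceeded(f"gave up before attempt {attempt}")
        try:
            # Pass the remaining budget down so the callee can honor it too.
            return fn(remaining)
        except ConnectionError:
            if attempt == max_attempts:
                raise
            # Exponential backoff, capped by whatever time is left.
            pause = backoff_s * 2 ** (attempt - 1)
            time.sleep(min(pause, max(0.0, deadline - time.monotonic())))


attempts = []


def flaky_lookup(remaining_s):
    """Stand-in for a network call: fails twice, then succeeds."""
    attempts.append(remaining_s)
    if len(attempts) < 3:
        raise ConnectionError("transient failure")
    return "ok"


result = call_with_deadline(flaky_lookup, timeout_s=1.0)
```

The detail that matters is that the budget is shared: each retry sees less remaining time than the one before. gRPC libraries build this notion of a per-call deadline into the framework, so it does not have to be reinvented in every client.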

gRPC is a CNCF incubating project.

gRPC Adoption

If you’re building high-throughput backend systems, gRPC naturally stands out as an excellent fit. Its performance characteristics align perfectly with the needs of modern microservices architectures, which is why so many organizations adopt it for internal, service-to-service communication. Combined, these advantages make gRPC a compelling choice for both developers and teams when selecting the right API technology for scalable, cloud-native systems.

Excellent tooling support and automatic code generation from Protocol Buffers for almost all popular programming languages are really pushing the adoption of gRPC. More and more organizations are moving to gRPC, including major engineering companies such as Netflix, Square, Lyft, Shopify, Dropbox, and Cisco, to name a few.

gRPC isn’t a Silver Bullet

So far you have seen the good and great parts of gRPC: the surprising numbers and the rapidly increasing adoption. But… but… but…

gRPC has a few challenges that you cannot and should not ignore. First of all, every technology should be viewed through the lens of use cases and trade-offs, so that we make practical, impactful decisions that do not turn into baggage later. A few things you should consider:

Learning Curve

This is the most important aspect of adopting a technology and of its success in the organization. While gRPC delivers strong performance, strict contracts, and great tooling, adopting it introduces a non-trivial learning curve. The major hurdles teams may face are:

- Developers familiar with JSON’s flexibility will initially find protobuf’s schema-first approach and number-indexed fields strict and unfamiliar.

- The build chain becomes more complex, and developers must understand generated stubs, interfaces, and client/server scaffolding.

- Teams must unlearn REST habits, such as overloading GET/POST verbs, and adopt a more method-centric API design style, thinking in terms of service boundaries.

- Streaming adds complexity in debugging, performance tuning, and error handling—especially compared to simple request/response REST APIs.

Limited Browser Support

Browsers can’t speak native gRPC because browser APIs don’t give web applications the low-level control over HTTP/2 (such as access to raw frames) that gRPC needs. Currently we need to put gRPC-Web in between, which:

- Requires a proxy

- Doesn’t support full bidirectional streaming.

This is why integrating gRPC can be challenging if your application is front-end heavy.

Complex Deployment Setup

gRPC works best with modern infrastructure; legacy systems may struggle. Load balancers and proxies must support HTTP/2. Traditional API gateways often don’t handle gRPC without special configuration.

Tricky to Inspect & Debug

The underlying data format is binary, so we can’t easily read requests and responses without tools. Manual testing is harder because we need gRPC clients or CLI tools. There is still a substantial gap in tooling before inspecting and debugging gRPC becomes as easy as it is for REST APIs.

Terrible as Public APIs

gRPC is optimized for machine-to-machine communication but not for humans. Browsers don’t support gRPC natively (only gRPC-Web as a workaround). JSON is more universal, practical and wise for external consumers. This is not going to change anytime soon.

Conclusion

gRPC is a must-have tool in your arsenal if you are planning, or already dealing with, high-throughput distributed systems that are plagued by latency, service-communication overhead, and occasional failures due to high resource consumption. But the move cannot happen overnight or in a matter of weeks.

This transition takes time, discipline, and a willingness to evolve how your team builds distributed systems. The payoff, however, is significant: a faster, more resilient, cloud-native foundation that can support the demands of modern microservices at scale.

I hope you have enjoyed learning the internal details and flows. Stay tuned for the upcoming write-ups.

Stay healthy, stay blessed!